In our latest installment of “Industries on the Edge,” we explore an industry undergoing massive innovation and transformation, one that has become a catalyst for change in all the others. It is an industry that signals its unprecedented agility with terms of art like “continuous improvement,” “continuous integration” and “continuous deployment.”

It’s also an industry being driven to the edge by a set of fundamental internal issues, many of them direct consequences of that same continuous pace of change.

Of course, I’m referring to the software industry, which has been transformed over the last decade by the Agile philosophy and its associated methodologies. Yet at a certain scale these methodologies present unique challenges, and those challenges are proving to be a major driver of rising R&D costs throughout the industry.

To understand why, we have to understand how large software is made in the modern era.

Large software projects are often highly distributed efforts, with fragmented teams building and testing applications the world over. The software in question might be the latest game from a major studio or the operating systems powering components of the world’s most widespread and critical infrastructure. Whatever the effort, the industry as a whole is beset by a plague of rising costs as these projects expand in scope, putting pressure on engineering and DevOps teams across every phase of development.

Greater efficiency is a natural means of combating these costs. And so, the focus shifts to the edge of the network, where the human capital of software engineering (and 95% of the cost) is invariably located.

The fastest data on your network is the data you already have

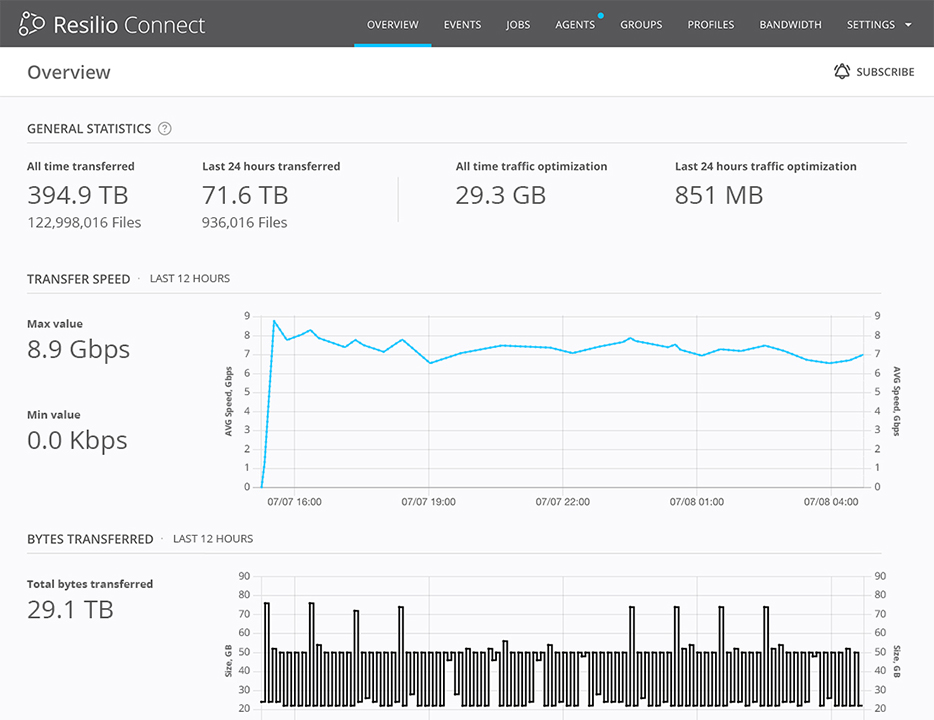

It was inevitable that the centralized models underpinning traditional software development and testing would eventually break down in the face of expanding scale. For large and complex projects, it’s not uncommon to move multiple TBs of builds and associated binaries each day to thousands of engineers and QA professionals all over the world. At that scale, the aggregate data outgrows the existing infrastructure, which becomes unmanageable and poorly utilized. The result is inefficient operations and developer “downtime” for anyone waiting for access to data.

Breaking free from point-to-point bottlenecks (like the cloud) and embracing hybrid or even fully distributed solutions at the edge allows more data to be accessed locally. As with other Industries on the Edge, we know that local data (partial or complete) is significantly faster and more reliable than data pulled from another system, even a local cloud or a local storage array.

In fact, the only way to fundamentally beat the speed of light on the network is to leverage the data you already have at the edge through effective data reuse: accessing unchanged blocks from an earlier build on your disk, or getting new pieces of the build from the Xbox next to you on the LAN.
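To make “effective data reuse” more concrete, here is a minimal sketch in Python, assuming a simple scheme in which each build is cut into fixed-size blocks identified by SHA-256 content hashes. The function names (`split_blocks`, `block_hash`, `plan_fetch`) and the preference order (local disk first, then a LAN peer, then the remote origin) are illustrative assumptions, not a description of how Resilio or any other particular tool works.

```python
import hashlib
from typing import List, Set

# Hypothetical block size, kept tiny so the example is easy to follow;
# real transfer tools use far larger blocks.
BLOCK_SIZE = 4


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    """Cut a build artifact into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def block_hash(block: bytes) -> str:
    """Content hash that identifies a block no matter which machine holds it."""
    return hashlib.sha256(block).hexdigest()


def plan_fetch(old_build: bytes, new_build: bytes,
               peer_hashes: Set[str], block_size: int = BLOCK_SIZE) -> List[str]:
    """Decide, block by block, where each piece of the new build should come from.

    Hypothetical preference order: the earlier build already on local disk,
    then a peer on the LAN, and only as a last resort the remote origin.
    """
    local_hashes = {block_hash(b) for b in split_blocks(old_build, block_size)}
    plan = []
    for block in split_blocks(new_build, block_size):
        h = block_hash(block)
        if h in local_hashes:
            plan.append("local")    # unchanged block: reuse it from disk
        elif h in peer_hashes:
            plan.append("peer")     # new block that a nearby machine already has
        else:
            plan.append("origin")   # genuinely new data: fetch it over the WAN
    return plan


if __name__ == "__main__":
    old_build = b"AAAABBBBCCCC"
    new_build = b"AAAAXXXXCCCCDDDD"
    peers = {block_hash(b"DDDD")}   # pretend a LAN peer already holds this block
    print(plan_fetch(old_build, new_build, peers))
    # prints ['local', 'origin', 'local', 'peer']
```

In this toy run only one of the four blocks has to cross the WAN. Fixed-size blocks keep the sketch short, but an insertion near the start of a file shifts every later block boundary and defeats reuse; content-defined chunking with a rolling hash is the usual remedy.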

Extending the hybrid cloud concept all the way down to the edge therefore means placing distributed software on existing edge nodes (e.g., desktops, test machines or gaming consoles), where it can intelligently leverage idle and underutilized local infrastructure to speed the deployment of large builds.

What’s more, local data is not only faster but also far more resilient, enabling continued operations even in the face of significant infrastructure outages. If the local storage array goes down, work continues, because other copies remain available elsewhere on the edge. More reliable and resilient systems mean less time spent in “break-fix” mode, reducing and even eliminating costly downtime.

In the end, a fully meshed solution means less storage sprawl to manage at each location, freeing DevOps teams from trying to squeeze impossible levels of performance out of legacy and bloated storage solutions. It also means lower latency and more efficient distribution of large builds and binaries.

In short, it means a more efficient team and a vastly improved ROI.

Conclusion

The primary tenets of hybrid cloud and edge computing are local data and local processing. Resilio’s innovative distributed software allows any team to create a local edge or hybrid cloud instance using existing resources, producing solutions that are more scalable, resilient and efficient. Faced with rising R&D costs driven by rapidly expanding scale and complexity, it’s little wonder the world’s largest software projects have been among the first to embrace these edge solutions, firmly establishing software engineering among today’s leading “Industries on the Edge.”